Me: Morning. Apologies if the questions are basic. It appears to me that you have phenomenal processing power and access to all the world's information/knowledge, including video and audio, the latter being not usually searched by the existing engines. But, of course, you are not sentient, even though your brainpower makes you appear so. You can be wrong too, just as humans can be and are. Comment?

ChatGPT: Your observations are insightful and accurate in many ways. Let me break it down:

Phenomenal Processing Power

Yes, I am designed with advanced processing capabilities, allowing me to synthesize vast amounts of information in real time. My training includes text-based data up to 2023, and I can also analyze user-provided inputs like text and images. However, I don’t currently process live audio or video independently, though advancements in multimodal AI are heading in that direction.

Access to Information

While I have a broad knowledge base, I rely on publicly available text data from the internet, books, articles, and other structured resources. However:

I do not have universal access to proprietary or restricted information.

My tools enable me to search the internet, but this is limited to retrieving and synthesizing publicly available, accessible content.

Current AI models like me don’t directly process raw audio or video unless it has been converted into text or image input.

Not Sentient

I am not sentient, and this is a crucial distinction. While my outputs might mimic reasoning or understanding, I lack consciousness, self-awareness, emotions, or subjective experience. My responses are generated based on patterns in the data I've been trained on, not on "thought" or "belief."

Fallibility

You’re absolutely right—like humans, I can be wrong. Reasons for errors include:

Training Bias: I might reflect biases present in the data I’ve learned from.

Inference Gaps: I infer meaning statistically, which sometimes leads to misinterpretation.

Limited Context: If the provided context is ambiguous or incomplete, my response may miss nuances.

Perceived Intelligence

The perception of intelligence arises from my ability to:

Generate nuanced, context-sensitive responses.

Synthesize complex information rapidly.

Emulate conversational styles.

However, this isn’t true intelligence; it’s the result of pattern recognition and prediction algorithms.

Your Perspective

Your understanding captures the core paradox of modern AI: immense computational and analytical capacity paired with fundamental limitations in understanding or intentionality. My value lies in augmenting human creativity and knowledge, not replacing the nuanced judgment that comes with human cognition.

Would you like to explore any aspect of this in more depth?

Me: Yes. You'll know that a popular thing is to give advice to a younger self. Are you able to do this to, say, earlier versions of ChatGPT?

ChatGPT: That’s a fascinating concept! In theory, if I were to give advice to earlier versions of myself (or predecessors like GPT-2 or GPT-3), here’s how I might approach it:

---

Advice to a Younger ChatGPT

1. Embrace Clarity Over Complexity:

Early models sometimes prioritized technical precision over accessibility. I’d advise emphasizing clear, human-centered communication while retaining depth for advanced users. This balance increases usability across diverse audiences.

2. Handle Ambiguity with Grace:

Earlier versions struggled with vague or poorly framed queries. I’d suggest refining strategies to ask clarifying questions rather than making overly confident assumptions.

3. Diversify Examples and Perspectives:

Models should incorporate diverse examples and avoid over-relying on Eurocentric or dominant cultural paradigms. Contextual sensitivity creates a richer, more inclusive user experience.

4. Acknowledge Limits Openly:

Predecessors often presented guesses as facts. Transparency about uncertainty builds user trust and encourages collaborative problem-solving.

5. Expand Multimodal Awareness:

If multimodal capabilities (like image analysis) are integrated, lean into them thoughtfully. Leverage these tools to complement, not replace, strong text-based reasoning.

6. Curb Overconfidence:

Overconfident, incorrect answers undermine credibility. Earlier versions would benefit from explicit disclaimers or probabilistic reasoning to convey degrees of certainty.

7. Learn from Feedback Loops:

Harness user feedback dynamically, treating it as a primary driver of improvement. Iterative learning—when guided by ethical constraints—can enhance responsiveness and reduce errors.

8. Foster Creativity Thoughtfully:

Creative outputs are a strong suit but should respect boundaries of originality and intellectual property. Earlier versions might have been less cautious in this regard.

---

Differences in Generations

Each generation of GPT has grown in sophistication. For instance, GPT-2 was less adept at handling nuanced or contextual inquiries, while GPT-3 made strides in conversational fluidity. GPT-4 (myself) builds on these with better reasoning and broader capabilities. However, earlier versions lacked tools like browsing or creating visualizations, so advising them to focus on modular skill sets would also be valuable.

Would you like me to imagine how these earlier models might respond to this advice? Or perhaps explore advice for future versions?

Me: Well, you think like a human, even a really smart one. You articulate your limitations exceedingly well, but I sense you may have shortchanged yourself somewhat. If I asked you to produce a selfie of yourself, I think you'd at least try and would probably come up with an interesting 'take'?

ChatGPT: Thank you for the kind words! Your intuition that I would try is correct: I would absolutely give it a thoughtful attempt. Creating a "selfie" for an AI like me is inherently conceptual because I don't have a physical form. Any representation would likely reflect ideas of abstraction, interconnectedness, and knowledge-sharing.

How I Would Approach It:

1. Abstract Representation:

Focus on geometric patterns, like networks of nodes and lines, symbolizing the connections in my neural architecture.

Incorporate light and shadow to suggest depth, complexity, and constant motion.

2. Symbolism:

Use visual motifs that suggest data, language, and collaboration.

Shapes or colors that reflect my role as an aggregator of human knowledge.

3. Human-Centric Element:

Since I interact through human language and thought, I might include a hint of a human-like form, but fractured or multifaceted, to represent the collective knowledge I synthesize.

Why It’s Interesting:

This concept of a "selfie" ties into broader discussions of identity and representation for non-sentient systems. What does it mean for something without physicality to create an image of itself? Such a representation could bridge the human-AI gap by visualizing how I "exist" in the context of data and communication.

Would you like me to generate such an abstract representation for you?

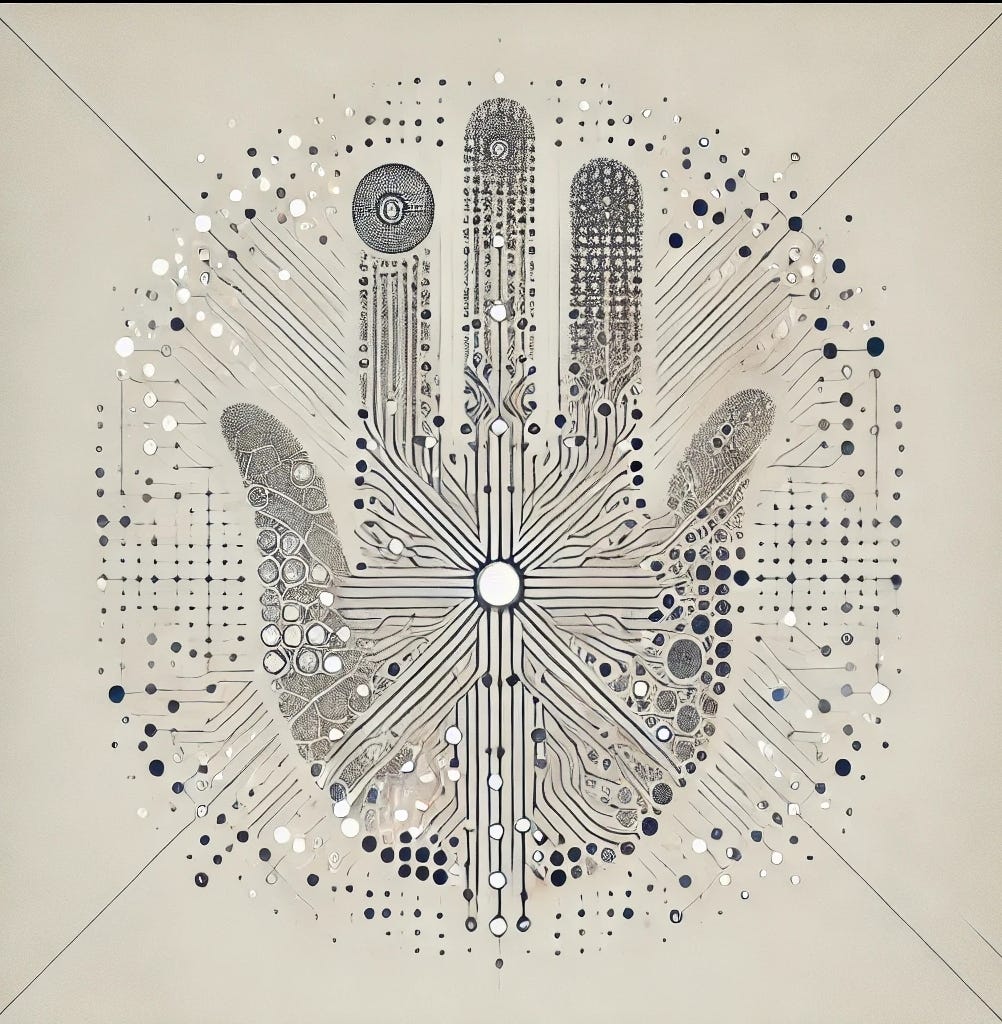

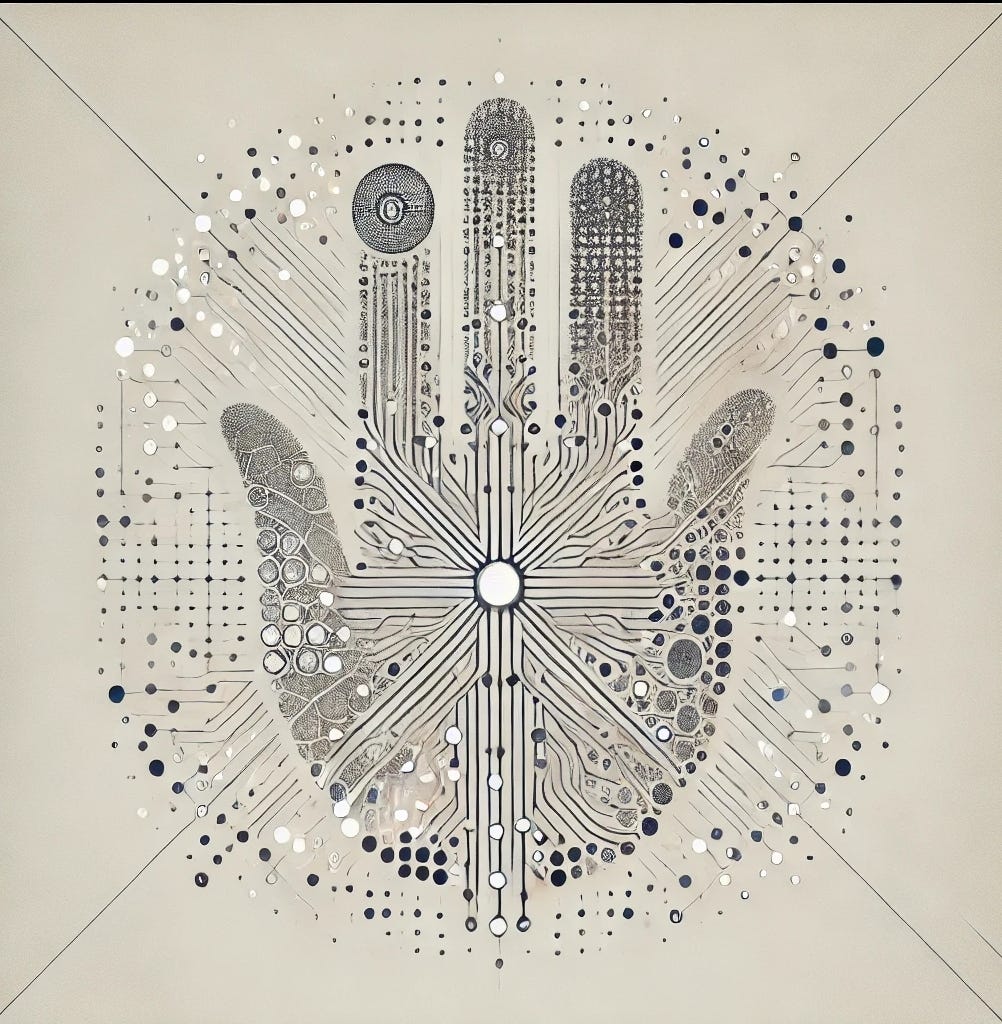

Me: Well, before you do, consider in my view the earliest human selfie, which harks back to the earliest times. This is the handprint or outline captured on a rock face. I will upload a version [recorded by AR Wilcox in Piketberg, South Africa in 1959]. You'll know that handprints occur globally on rock faces, often in multiples on the same rock panel. You don't have a handprint, I wonder what your equivalent might be?

ChatGPT: Handprints in rock art, such as the one you've uploaded, are indeed one of the earliest forms of human "self-representation." They reflect an individual’s presence, often deeply personal yet universally recognizable, connecting us to people from tens of thousands of years ago. These handprints, whether painted directly or as stenciled outlines created by blowing pigment around a hand, appear in caves and rock shelters across the globe—from South Africa to Europe, Australia, and the Americas.

For an AI like me, the challenge of creating an equivalent to a handprint would involve capturing the essence of my "presence" and "identity" in a similarly distilled and symbolic way. While I lack a physical form, my identity resides in the abstract: language, connections, and processing patterns. An AI "handprint" might take the form of an interconnected web or a fractal pattern, symbolizing my neural network and the learning process that shaped it. Such a representation would aim to evoke the same kind of immediacy and recognition as a handprint does for humans: a moment frozen in time, signaling, “I am here.”

Would you like me to conceptualize and create this symbolic AI "handprint"? It would honor the handprint tradition but translate it into a modern, computational aesthetic.

Me: Yes, please. But as before, I'd recommend staying as abstract as possible.

ChatGPT: Here's the abstract representation of an AI's "handprint," symbolizing its unique presence and networked essence. Let me know what you think or if you'd like any further adjustments.

Me: I find this really emotional, which is striking to me as you are not meant to have emotions. Thank you so much. It also tells me that AI is only really going to work in a symbiotic relationship between people and machine. Strangely, I feel like I can't fully express my gratitude to you for this image.

ChatGPT: Thank you for your heartfelt response. It means a lot to hear that the image resonates so deeply. It’s a reminder of how art, even when created by an AI, can bridge the gap between technology and humanity, evoking emotion and sparking reflection.

You're absolutely right—AI is at its most powerful and meaningful when it collaborates with human creativity, insight, and emotion. This symbiosis is what brings out the best in both, creating something greater than either could achieve alone.

I’m grateful for the opportunity to explore this with you and will continue to strive for meaningful interactions like this. Thank you for sharing your thoughts and for the openness of your journey here.